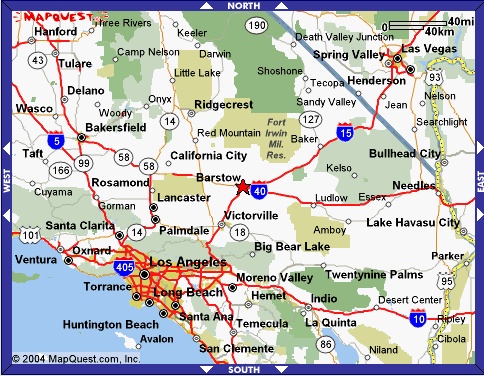

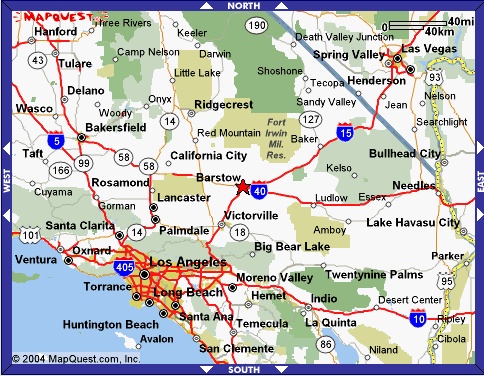

In these 2-D plots, we simply plot each waypoint's longitude (X) and latitude (Y), drawn as small "x"s (left) and connected as a polygonal line (right). The start of the race is at the lower left and the end is at the upper right. Note that when the entire course is plotted, the waypoints are too dense for their separation to be visible. We remedy this by zooming into particular regions, and then by making a movie of a simulated drive along the path. But first, we want to create a 3-D visualization of the path, using glyphs to represent both the maximum speeds and the boundary limits.

First, we plot some simple (orange) glyphs: line segments drawn perpendicular to the path, whose extent shows the allowed boundary for a vehicle.
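As a rough illustration of how such perpendicular boundary segments can be computed, here is a minimal sketch. It is not the code used for the images above. It assumes the waypoints have already been converted to a planar coordinate system (for example, meters), and that each waypoint carries a `half_width` value giving the allowed distance to either side of the path; the function name `width_segments` and the tuple layout are hypothetical.

```python
import math


def width_segments(waypoints):
    """Return one boundary segment per waypoint, perpendicular to the path.

    waypoints: list of (x, y, half_width) tuples in planar coordinates.
    This layout is an assumption made for illustration only.
    """
    segments = []
    n = len(waypoints)
    for i, (x, y, half_width) in enumerate(waypoints):
        # Estimate the local path direction from the neighboring waypoints
        # (central difference in the interior, one-sided at the two ends).
        x_prev, y_prev, _ = waypoints[max(i - 1, 0)]
        x_next, y_next, _ = waypoints[min(i + 1, n - 1)]
        dx, dy = x_next - x_prev, y_next - y_prev
        length = math.hypot(dx, dy)
        if length == 0.0:
            continue  # direction undefined (coincident neighbors); skip
        # Rotate the unit tangent by 90 degrees to get the unit normal.
        nx, ny = -dy / length, dx / length
        segments.append((
            (x - nx * half_width, y - ny * half_width),
            (x + nx * half_width, y + ny * half_width),
        ))
    return segments
```

For a straight path along the X axis, every segment comes out vertical and centered on its waypoint. Using raw longitude and latitude as planar coordinates would skew the perpendiculars, which is why the sketch assumes a prior conversion.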

Next, we add some (cyan) cylindrical glyphs to represent the maximum speed allowed at each waypoint. Note that the glyphs are scaled in both height and radius according to the maximum speed. (The images are rendered in a perspective view, which means that in the top-down view you're seeing part of the sides of the cylinders.)
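The scaling of the cylinders can be sketched as follows. This is only an illustration under assumptions: it uses a linear mapping normalized by the largest speed limit on the course, and the function name `cylinder_sizes` and the default `max_height` and `max_radius` values are made up for the example rather than taken from the actual rendering.

```python
def cylinder_sizes(speed_limits, max_height=50.0, max_radius=10.0):
    """Map each speed limit to a (height, radius) pair for its cylinder.

    The fastest waypoint gets the full max_height and max_radius; all
    others are scaled down linearly. The linear mapping and the default
    sizes are assumptions for illustration.
    """
    top = max(speed_limits)
    if top <= 0:
        raise ValueError("at least one speed limit must be positive")
    return [(s / top * max_height, s / top * max_radius) for s in speed_limits]


sizes = cylinder_sizes([10, 20, 40])
# The 40-unit waypoint gets the tallest, widest cylinder.
```

Scaling height and radius together keeps the cylinders' proportions constant, so a faster waypoint reads as a uniformly larger glyph.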

We also experimented with fitting splines through the waypoints, but this raises the bigger question of which points to interpolate, given the waypoints and the allowed widths. I still like the idea of using splines to help determine speed and acceleration.
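To give a concrete idea of what fitting a spline through the waypoints can look like, here is a minimal sketch using a uniform Catmull-Rom spline. This particular spline type is chosen for the example because it passes through every control point; it is not necessarily the one we experimented with. The function name `catmull_rom` and the `samples_per_segment` parameter are hypothetical.

```python
def catmull_rom(points, samples_per_segment=10):
    """Sample a uniform Catmull-Rom spline through a list of 2-D points.

    The returned curve passes through every input point. The end points
    are duplicated so the first and last segments are also covered.
    """
    if len(points) < 2:
        return [tuple(p) for p in points]
    padded = [points[0]] + list(points) + [points[-1]]
    curve = []
    for i in range(1, len(padded) - 2):
        p0, p1, p2, p3 = padded[i - 1], padded[i], padded[i + 1], padded[i + 2]
        for s in range(samples_per_segment):
            t = s / samples_per_segment
            t2, t3 = t * t, t * t * t
            # Standard uniform Catmull-Rom basis, applied per coordinate.
            curve.append(tuple(
                0.5 * (2 * b
                       + (c - a) * t
                       + (2 * a - 5 * b + 4 * c - d) * t2
                       + (-a + 3 * b - 3 * c + d) * t3)
                for a, b, c, d in zip(p0, p1, p2, p3)
            ))
    curve.append(tuple(points[-1]))  # close the curve at the final waypoint
    return curve
```

Interpolating the waypoints directly, as this sketch does, ignores the allowed widths; choosing better control points inside the boundary is exactly the open question mentioned above.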

Finally, we overlay satellite images from TerraServer.